Lets learn "About web crawler system design"

In this tutorial, we'll learn about the system design for the distributed web crawler. A web crawler is also known as a spider bot. It's a framework or tool or software which is used to collect the web pages and save them in a way to easily access them. This process is known as web indexing.

Different use-cases of web crawlers

- Search Engine: E.g: whenever you search something it returns the relevant pages as we've already stored the web pages in an optimized fashion.

- Copyright violation detection: This means finding users using copyright content and sue them.

- Keyword-based finding: This is similar to the search engine which helps to find web pages based on keywords.

- Web malware detection: To detect if someone has created similar pages for phishing attacks. Like amazon could be doing web scrawling to search web pages similar to its own web page that some hacker is using for phishing.

- Web analysis: Used by some companies/data scientists to characterize the feature of web application.

- Data mining: Mostly similar to web analysis and convert raw information to useful information.

Design

Features we'll be supporting and discussing in this tutorial

- Politeness/crawl rate

- DNS Query

- Distributed Crawling

- Priority Crawling

- Duplicate detection

Scale

- We've around 2 Billion websites available on internet ( as of 2020 ).

- On an average we've 100 pages per website.

- So our target is 200Billion pages and assuming 120 Kb as average pages size, we've 24 Petabytes of data.

Goals

- Scalability

- Extensibility

- Freshness of pages

- Server side rendering

- Politeness: Crawer consume resources on destination servers and often visit sites without approval. Hence we need to be polite of destination servers.

Architecture

Let's learn about the different components individually.- Seed URLs: These are initiator URLs that need to be provided to start crawling. One of the strategies here is to provide a few URLs from all the sectors including education, railways, movies, etc.

- URL Frontier: It's basically a priority queue to which seed URLs are provided. It has different built-in features including politeness, priority, etc.

- Fetcher & Renderer: This is built using the threads or anything which provides concurrency. These components can be scaled by adding more and more machines.

- Fetching: It fetches the content of URLs. Basically, we have workers doing fetching + rendering per worker. When a worker is free it asks the URL frontier to provide the next URL to crawl. Then it uses the DNS resolver to resolve the IP address of the host. Why internal DNS? Because external DNS resolver takes some time to resolve the address. Internal DNS fastens the resolution part. Another thing is DNS resolver is single-threaded which blocks the request until the current request is being processed that all slows down things.

- Rendering: Why rendering is required because earlier fetching the content was sufficient before reactJS/angularJS because earlier the content was static. But with these high-level languages, content is dynamic because they make multiple ajax calls to get all the content property. Hence we need to render the page after fetching.

- NOTE: We need to set the USER-AGENT field in the requests header made from the crawler. Like, google web crawler set it to GOOGLE BOT. The advantage of this is that website admins configure webserver behave differently for different search engines.

- Caching the content: One of the executors get the content we need to cache. That is when we use Reddis and also it is persisted to storage. Why caching is required? For faster access and in permanent storage we can compress it.

- Queue (Response): Now after the executor processes the data and saves it to Redis, it also sends a message to the response queue containing the pointers to data persisted in Redis. The consumer of this response queue is the list of extractors ( we can add as many it is required ).

- Extractors: Extractors get the data from Redis and perform the extractions.

- URL Extractors: The URL extractor extracts the URLs from the page.

- After extracting the URLs, it maps the URLs to its domain. Like mail.google.com, keep.google.com, docs.google.com are mapped to the google.com domain.

- Normalize the URLs to make them follow standard conventions.

- Duplicate Detectors: Duplicate detectors detect duplicates and exclude them.

- URL Filter: It filters the URL based on the type of crawler. Like if a crawler is configured to use mp3 content, the URL filter will ignore other content.

- URL Loader | Is Crawled: Once the URL filtering is done, we'll check if the URL is already crawled or not. To check if the URL is crawled or not we'll use BLOOMFILTERS for optimization. Then unique URLs are again sent to the URL frontier.

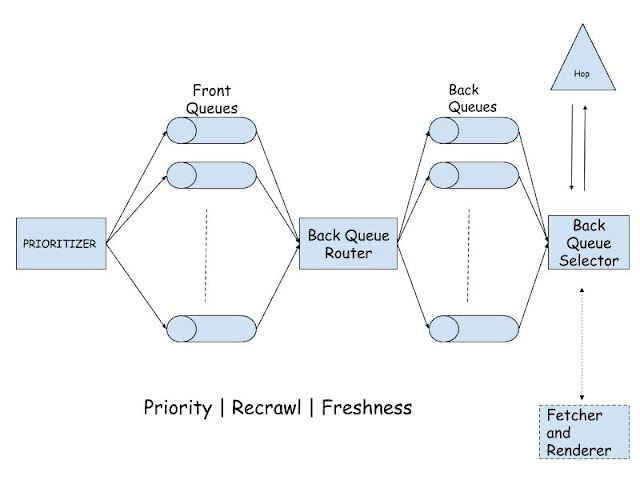

System design for URL Frontier

URL Frontier ensures the following things:

- Priority - For some of the websites, we need to crawl frequently because the content keeps updating for those. For e.g news websites, or some sports website. For such websites we set priority to high.

- Recrawl - As mentioned above there are some websites which requires recrawl.

- Freshness - Recrawling websites ensures the freshness of the content.

- Politeness - This is a very important point. Any crawler should be polite on any server to ensure the crawling on that servers should not affect end users. Politeness can be in terms of visiting a particular domain or creating a limit connections to particular server.

Different components of URL Frontier

Now we've discussed the different features of URL Frontier, its time to see how different components of URL Frontier ensures these features.

1. Front Queues: These are FIFO queues which maintains the list of URLs to crawl. No. of these queues depend on the range of priorities we have. If we have priorities ranging from 0-999, the number of such queues will be 1000.

2. Prioritizer: This component is responsible for assigning the priority to the incoming URLs. Based on the priority of the URL it sends to the corresponding queue in the front queues list. How prioritizer gives the priority? Basically we checks the older data to figure out how frequently the data had changed for given URL. More frequently the data is changing, more is the priority for that URL.

3. Back queue router: From all the front queues, data goes to the back queues via back queue routers. Basically back queue router ensures that none of the back queues remain empty by continuous polling. So this component pulls the URLs from front queues and pushes them to the back queues.

4. Back queues: Back queues like front queues are also FIFO queues. The number of back queues we have is equal to the number of executors. Every back queue contains the URLs for particular domain.

5. Back queue selector: Back queue selector selects the URL from back queues and send it to the fetcher + renderer. Back queue selector ensures the crawler should be polite against any servers i.e it shouldn't be hitting servers of particular domains much frequently.

Detecting updates and Duplicates

Now let talk about the different types of URL Extractors.

1. Updates Detector: This component detects how frequently the web page is updating which will be used by the prioritizer to set the priority of the URL. How it detects updates? Shall we need to download the page and match with the old content? The answer is NO!. What it does is make a HEAD request to the URL which returns the last modified time. And we simply match the last modified time to detect if the page is updated or not.

2. Duplicates Detector: Statistics show that 30% of the pages are duplicates. So detecting duplicates is very important as it can enhance the performance of the web crawler. Now how to detect duplicates?

a. Brute force method: This method includes comparing each word of the page. This is inefficient, right? This will not work on scale.

b. Hashing: This method includes calculating the hash (like md5) of each page and compares its value. While storing the page in Redis we can also store its md5 and eliminate the duplicates.

But this method also won't work well on the scale because two pages will be having the same hash only if each and every word is the same which is not the case in reality.

c. Probabilistic Hashing: There are some algorithms that do the probabilistic matching. These algorithm includes 1. Min hash, 2. Sim hash, 3. Fuzzy hash. These algorithms will help in doing the probabilistic matching. We will dive into the working of these algorithms in a separate tutorial.

HOPE YOU LEARNT SOMETHING NEW IN THIS TUTORIAL. FEEL FREE TO COMMENT BELOW IN CASE OF ANY DOUBTS. AAANNNNDDDDDD.... STAY TUNED FOR MORE TUTORIALS :)

Comments

Post a Comment